Introduction to CPU caches

How the CPU cache affects software performance

What is CPU cache?

The majority of modern CPUs have a cache that is significantly faster than main memory. Its main purpose is to store data that is frequently accessed by the CPU on the chip itself, so the CPU doesn't have to go through the slow process of reading data from the main memory.

So, between our instantly fast CPU registers and external main memory, we have one large shared CPU cache:

The capacity of the cache is much smaller than the capacity of the memory. On the majority of CPUs, it is usually below 50 MB. But it has lower latency and significantly higher bandwidth.

Multi-core CPUs also have multiple levels of cache. Some caches are shared between multiple cores, while others are local only to a single CPU core. For example, the Apple M1 CPU has 2 levels of cache: one L2 cache that is shared between all cores, and an L1 cache for each core. On the other hand, the Intel Core i7-9700K has 3 levels of cache, with the L3 cache being global. The cache that is shared between all cores is also referred to as the "last level cache" or LLC for short.

Caches of different levels have varying capacities. L2 cache is smaller than L3, while L1 is smaller than L2. Smaller caches (like L1) have higher bandwidth and lower latency. LLC is usually largest one being shared between all CPU and therefore the slowest one.

Just to have an understanding of the size of different caches, lets’s look at Intel Core i9-12900K specs (all numbers are related to performance cores):

L1 cache: 80 KB

L2 cache: 1280 KB

L3 cache: 30 MB

As you can see L1 cache is very small compared to L3 cache, but it can provide you data on the same speed as registers. L2 is a compromise between two.

But how fast the CPU cache is?

From tests that I’ve performed on my M1 CPU (which only has 2 levels of cache), the difference in the memory throughput for different sizes of data is following:

As you can see, the difference is noticeable. In my particular test, the memory throughput doubles if the data is able to fit in the L1 cache.

However, fitting data into CPU caches might be quite challenging due to the fact that we don’t have any control over what gets to the cache, and the cache itself is quite small. Though we will try anyway. But before doing that, we should review how data is stored in the cache - this knowledge will become quite handy later.

Cache lines

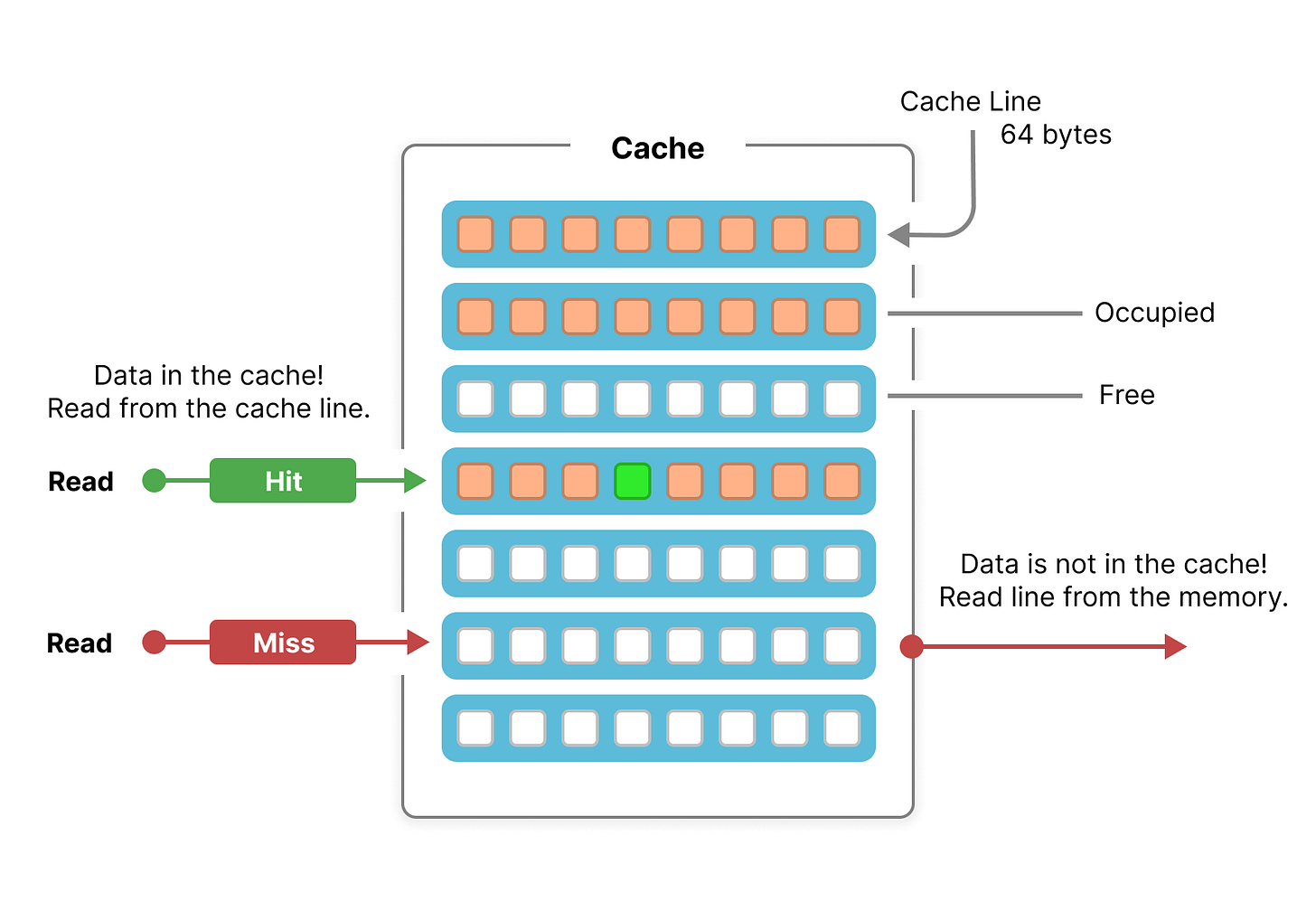

A cache line is the smallest unit of data that the CPU can work with in the cache. To rephrase it: the CPU cannot load only 1 byte of data into the cache. Every load in your program will always result in load of at least one cache line into the cache.

A cache line is typically 64 bytes in size. This means that if you load 4 bytes of data from main memory (for example, just one int), the CPU will load the entire 64 bytes of data into the cache. If your integer is located at the beginning of the cache line, it means that you can read other 60 bytes of data (or 15 integers) essentially for free because they will already be in the CPU cache.

Every time you load data and it happens to be in the CPU cache, it is referred to as a "cache hit." If the data is not in the CPU cache and needs to be fetched from main memory (which is relatively slow), it is referred to as a "cache miss."

There are utilities and profilers available that can provide precise information on the number of cache hits and misses that occurred while running your program. We will explore such tools later.

Which data gets to the CPU cache?

Programmers usually only have control on what is stored in registers and memory. CPU cache on another hand is fully controlled by the CPU itself.

Frequently used data

The general goal of CPU cache is to keep data which is accessed more frequently. So if a program accesses some data in the memory very often, this data in theory should stay in the CPU cache (as long as data is small enough to fit into the cache in the first place).

Data you read linearly

One additional use of CPU cache is caching data, that will be accessed next by the program, in advance. This is what pre-fetcher does. It looks at memory access pattern of your program and tries to predict which data will be loaded next, and puts it in the cache.

Let’s try to imagine what is like to predict what the program will read next from the memory so we can “pre-fetch” it in advance and put it into the CPU cache.

One program has loaded 64 bytes of data by accessing every 4 bytes in some memory region. So it seems like this program is reading some kind of an array where data is placed sequently. Let’s help to improve performance of that program by using the maximum memory bandwidth to “pre-fetch” the next portion of the array into CPU cache. Now, when the program loads the next 4 bytes from the array, that data is already in the cache.

But suppose we have another program which just jumps randomly from one memory address to another. Worse than that, this program accesses the region of memory which is larger than the whole CPU cache. In this case it simply impossible to decide what data should loaded into the cache next. And loading the whole region of memory into the cache will be simply impossible. So every data load by that program will result in cache miss.

How to get data into the cache?

In the next article we will look at how to write code which takes advantage of the CPU cache. But for now, you can just remember simple recommendations (we will test every one of them later):

Make sure that your data is as small as possible.

If doesn’t fit into L1 cache, try to split your data and process it on different cores so every core will access smaller amount of data that will fit into core-local L1 cache.

Make sure that your data at least fits into the last level cache.

Read data in predictable manner. Linear reads are the best for pre-fetcher.

Example: Linked-list where elements are placed randomly in the memory - the worst case scenario. Simply switching to an array or improving memory layout of the linked list will make you program faster.

Pack data your data to fit into a cache line.

Data that is packed to fit within one cache line will be loaded into the cache together. As a result, every part of that data will already be in the cache as soon as you load just a portion of it.

How this affects my code?

In the next article we’ll write a lot of code which will use different memory access patterns and we’ll measure how well it performs. Based on that hopefully you will get some more clear and practical understanding on how CPU cache can affect performance of your software.

Things to watch and read on the topic

Here’s a list of links to articles and videos that can help you learn more about CPU caches: